Is an AI arms race more dangerous than a nuclear one? Yes, but not like in ‘Terminator’

27 Jan, 2020 15:14

Fear that the impact of militarised artificial intelligence (AI) on war and international relations could be more deadly than that of the nuclear arms race is both an exaggeration and an underestimation of the threat AI represents.

It is inevitable that the increasing use of AI for military purposes raises the spectre of a Terminator-esque future in which computers and autonomous armies of robots conduct wars with little human control or oversight. It might make good headlines and movies. It will also sell books. But this scenario is an exaggeration. It rests on a fundamental misunderstanding of what AI is, and it overlooks the fact that it will always be human beings, not machines, who make war.

That there is a global AI arms race is a reality we should not ignore. Three countries in particular, the US, China, and Russia, have publicly stated that building intelligent machines is now vital to the future of their national security.

China's State Council has a detailed strategy designed to make the country the front-runner and global innovation centre in AI by 2030. The US, the most advanced and vibrant AI developer, does not have a prescriptive roadmap like China's. But the Pentagon has been pursuing a strategy known as the ‘Third Offset,’ which aims to use weapons powered by smart software to give the US the same sort of advantage over potential adversaries that it once held through nuclear bombs and precision-guided weapons. Russia, which lags behind China and the US, began a modernisation programme in 2008. Its Military Industrial Committee has set a target of making 30 percent of military equipment robotic by 2025.

This is significant. But there is an important difference between the AI arms race and the previous nuclear one: the AI arms race has been invigorated by commercial applications, not simply military strategy.

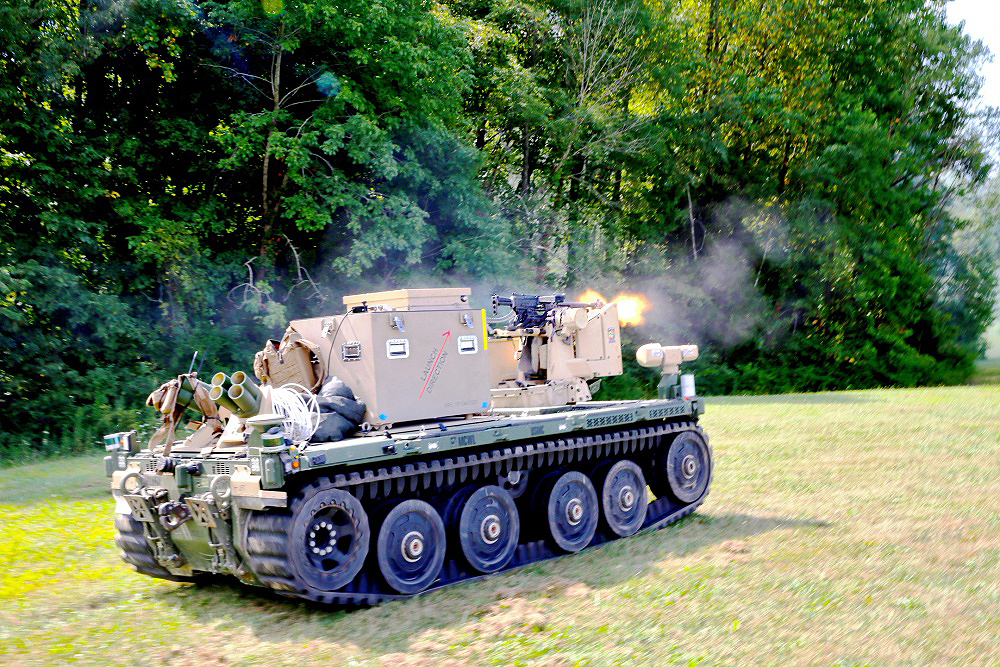

ALSO ON RT.COMPentagon to create ‘AI assistant’ to help tank crews navigate & MAKE DECISIONS in battleFrom self-driving cars to self-driving tanks?

Algorithms developed by start-ups that are good at searching through holiday photos, for example, can easily be repurposed to scour spy satellite imagery. The control software needed for an autonomous vehicle, which companies like Google and Uber are working on, is not dissimilar to that required for a driverless tank. Many recent advances in developing and deploying artificial intelligence emerged from research at companies such as Google, not the Pentagon.

This is not without its own problems. Many researchers and scientists are concerned about militarised AI. More than 3,000 researchers, scientists, and executives from companies including Microsoft and Google signed a letter to the Obama administration in 2015 demanding a ban on autonomous weapons.

For competitors like China, this has so far not been an issue. China's AI strategy directly links commercial and defence AI developments. A national lab dedicated to making China more competitive in machine learning, for example, is operated by Baidu, the country's leading search engine.

In other words, China's model of military-civil fusion means its AI research will flow readily from tech firms into the military, without the kind of barriers that some Google employees have sought to erect in the United States.

In the US, an effort to ease potential friction between the private sector and the military has seen the Pentagon boost AI spending to help smaller tech companies work with the armed forces.

What this means in practice is that, unlike the nuclear arms race, which was brought about by the Second World War at a time when national resources were pooled to prosecute a war, the AI arms race is being fuelled by huge global commercial opportunities.

Humans still decide when robots shoot

Existing trends of automation, sensor-based industrial monitoring, and algorithmic analysis of business processes have received an enormous boost from new AI techniques. Combined with ever faster computing power and years of accumulated digitised data, these techniques mean that computers are now learning the tasks humans require of them rather than merely doing as they are told.

This is not human intelligence. Machine learning will not lead to military robots autonomously deciding whether, or when, to kill.

But the growing capability of machines does mark an important evolution. AI-driven computing means machines can now excel at clearly defined tasks, interpreting the kinds of input their designers have prepared them for, as opposed to making decisions about situations they have never been exposed to. As a result, a powerful adjunct to human cognition can now be incorporated into military power. This is what the AI race is really all about.

These developments give rise to two unexpected outcomes that make the AI arms race both different and potentially more dangerous.

A weapon anyone can own

While the AI arms race may appear open only to the major global powers, this is not strictly true. AI can also make it easier for smaller countries and organisations to threaten the bigger powers.

Nuclear weapons may be easier than ever to build, but they still require investment, resources, technology, and expertise, all of which are in short supply. Code and digital data, by contrast, keep getting cheaper and spread quickly and for free. Machine learning is becoming ubiquitous: image and facial recognition capabilities now crop up in high school science fair projects.

There is another dimension to this. Developments like drone deliveries and autonomous passenger vehicles can easily become powerful tools of asymmetric warfare. Islamic State (IS, formerly ISIS) has used consumer quadcopters to drop grenades on its enemies. And because many of these technologies, including techniques that can fuel cyberwarfare, have been developed for commercial purposes, they will inevitably find their way into the vibrant black market in hacking tools and services.

Unlike the nuclear arms race, in which mutual nuclear deterrence stabilised the military balance of power, the AI arms race threatens to create a wild west of differing, yet deadly, capabilities.

AI is also a potential source of instability. If machine learning algorithms can be hacked or fooled into feeding incorrect information to humans in command and control structures, trusting these automated systems and algorithms could prove catastrophic.

No casualties means more wars

The second outcome concerns the conduct of future wars. Militarised AI, such as more intelligent ground and aerial robots that can support or work alongside troops, means military engagements will require fewer human soldiers, if any at all.

When it is blown-up computer chips and equipment, not body bags, that are sent back from the battlefield, the domestic politics of war will fundamentally change.

The economic costs of war will always be politically contested. But when war can be waged without the threat of a domestic backlash over casualties, the nature of modern warfare changes, and the political pressure to keep the military accountable disappears.

The prospect of a 21st-century military less constrained by domestic politics than that of any previous generation is truly terrifying. It is this political dynamic that suggests an AI arms race could be more deadly than the nuclear one.

The statements, views and opinions expressed in this column are solely those of the author and do not necessarily represent those of RT.